
Nov 6, 2024


Read: 8 minutes

MangoByte Team


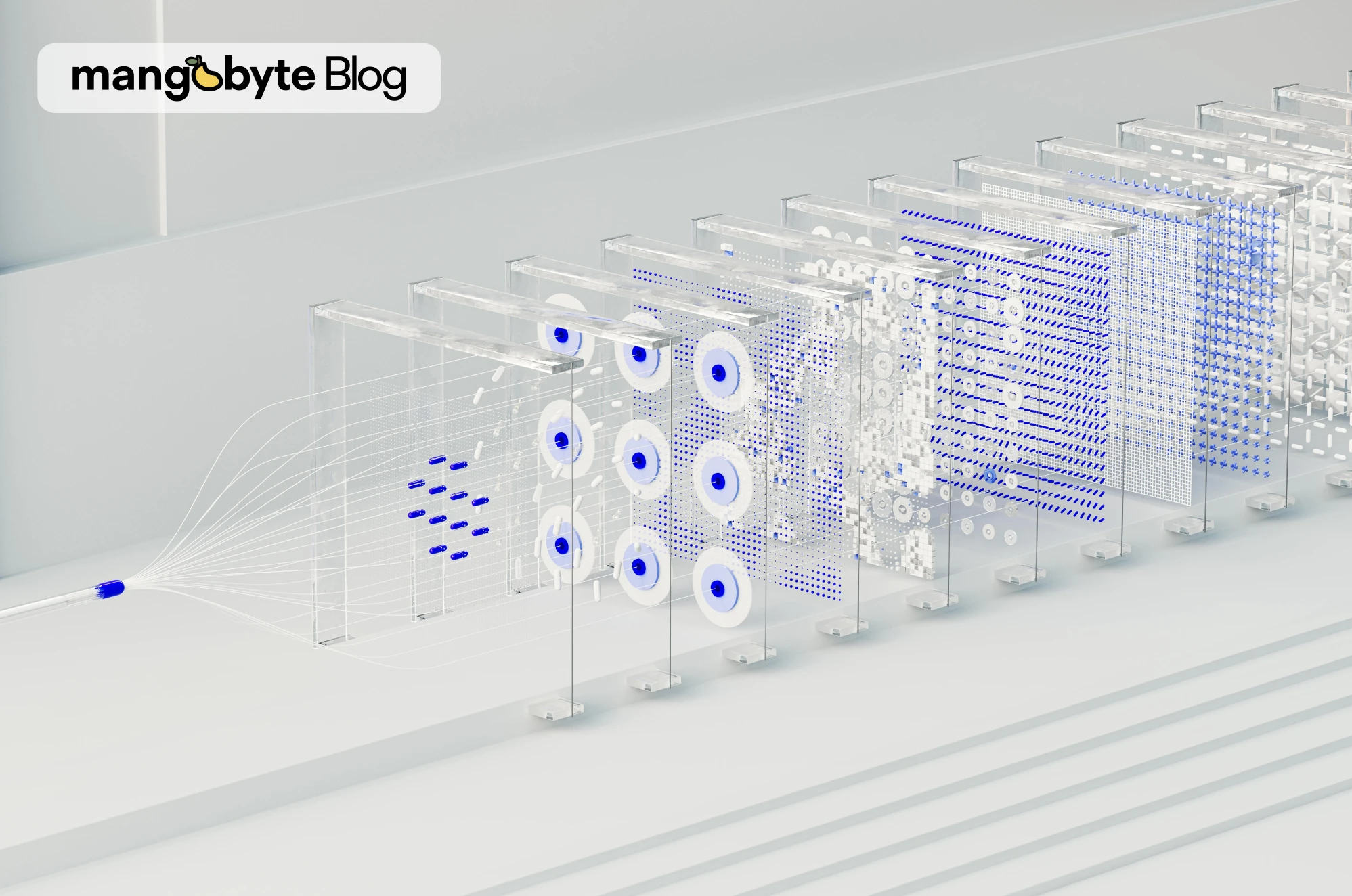

For an AI model to learn, it needs large amounts of data. During the training process, the AI repeatedly analyzes these data to identify patterns and extract knowledge that allows it to adapt to different contexts. This process is what enables an AI model to develop complex skills and solve problems accurately. Without data, AI couldn't offer useful results or adapt to new situations.

Organizing the necessary data to train AI models is a complex task that depends on several key components. These include Common Crawl, an open library that compiles large volumes of data from the internet; LAION-5B, a dataset created from this library to train AI models; and CLIP, a model that evaluates the relationship between images and text. Together, these elements form the foundation upon which AI “learns” and acquires knowledge of the world. However, their use also raises ethical dilemmas that deserve deeper reflection.

Common Crawl: The Internet Library

Common Crawl is a non-profit project that acts as a “public library” of the internet, collecting large volumes of publicly accessible data. Using automated programs known as web crawlers, it visits billions of web pages in each of its periodic crawls and archives their content: articles, blog posts, links to images, and other publicly available material. This makes Common Crawl a massive archive of the web, used for research, trend analysis, and, in this case, training AI models.

The automated process of Common Crawl enables large-scale data collection without direct human intervention. However, this massive collection also accumulates potentially problematic content, such as personal information or explicit material. These risks, common in automated collection, are difficult to fully control with automatic filters, posing important challenges for ensuring responsible and ethical use of these data.

LAION-5B: A Dataset to Train AI

LAION-5B makes this vast raw archive usable for AI training. Built by LAION, a non-profit organization, it is a dataset that selects and structures data from Common Crawl for AI model training. While Common Crawl acts as a general archive of the web, LAION used an automated process to find images on web pages together with their associated text, typically the image's alt text, forming image-text pairs.
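To make the idea of an “image-text pair” concrete, here is a minimal sketch that pulls (image URL, alt text) pairs out of a single HTML page using Python's standard `html.parser` module. It is a simplified illustration, not LAION's actual pipeline, which processed Common Crawl's crawl files at a vastly larger scale; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class ImgAltExtractor(HTMLParser):
    """Collects (image URL, alt text) pairs from <img> tags in one HTML page."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)  # attrs is a list of (name, value) tuples
        src = attributes.get("src")
        alt = (attributes.get("alt") or "").strip()
        # Skip images with no URL or no descriptive text: they cannot form a pair.
        if src and alt:
            self.pairs.append((src, alt))


def extract_pairs(html):
    parser = ImgAltExtractor()
    parser.feed(html)
    parser.close()
    return parser.pairs


page = """
<html><body>
  <img src="https://example.com/cat.jpg" alt="A tabby cat asleep on a sofa">
  <img src="https://example.com/spacer.gif" alt="">
  <img src="https://example.com/logo.png">
</body></html>
"""
print(extract_pairs(page))
# [('https://example.com/cat.jpg', 'A tabby cat asleep on a sofa')]
```

Only the first image yields a pair: the spacer has an empty alt attribute and the logo has none, which is one reason raw web data shrinks considerably once it is turned into training pairs.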

The purpose of LAION-5B is clear: to teach AI models to relate images to words and phrases, allowing AI to “see” and “understand” visual content. To filter candidate pairs at this scale, the creators of LAION-5B turned to an AI model called CLIP, which assigns a similarity score to each image-text pair to measure how closely they relate. The final dataset includes only pairs that exceeded the minimum similarity value set by the LAION team, reaching a total of about 5.85 billion pairs.

CLIP: Measuring Coherence Between Image and Description

CLIP is an AI model developed by OpenAI that assigns a similarity score to an image and a text, evaluating how well they match. Trained on roughly 400 million image-text pairs collected from the internet, CLIP has learned to identify patterns and “understand” relationships between visual elements and words or phrases.
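Under the hood, CLIP maps an image and a text into vectors (embeddings), and the similarity score used for filtering is the cosine similarity between those two vectors. The sketch below shows only that final step; the 4-dimensional vectors are invented for illustration, whereas real CLIP embeddings have hundreds of dimensions and come from its trained image and text encoders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings: one image and two candidate captions.
image_embedding = [0.9, 0.1, 0.3, 0.0]
matching_caption = [0.8, 0.2, 0.4, 0.1]
unrelated_caption = [0.0, 0.9, 0.0, 0.4]

print(round(cosine_similarity(image_embedding, matching_caption), 3))
print(round(cosine_similarity(image_embedding, unrelated_caption), 3))
```

The arithmetic is simple and fully transparent; what is hard to inspect is how the trained encoders decide where each image and caption land in that vector space.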

However, CLIP's inner workings remain largely opaque. OpenAI released the model's code and weights, and the score itself is simply the cosine similarity between CLIP's image and text embeddings, but what those learned embeddings encode is difficult to interpret. OpenAI has also not published the data it used to train CLIP, so there is no detailed record of the examples from which the model learned what counts as a good match. As a result, users of CLIP, such as the LAION team, rely on a model that assigns “relevance” ratings without clear visibility into the foundations of those ratings, which introduces both an ethical and a practical dilemma.

To create the final dataset, the LAION team also had to define a similarity threshold: the minimum score at which a pair is considered relevant enough to be included. This choice is largely arbitrary and can have a considerable impact, because there is no universal or fully transparent criterion for the ideal cutoff point. In LAION-5B, for example, raising the threshold by just 0.01, from 0.26 to 0.27, would exclude up to 16% of the pairs, more than 937 million image-text pairs.
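Why can such a small change matter so much? The sketch below measures what share of the pairs kept at one cutoff would be dropped at a slightly higher one. The ten scores are invented, and treating the threshold as inclusive (score >= threshold) is an assumption.

```python
def fraction_excluded(scores, old_threshold, new_threshold):
    """Share of the pairs kept at old_threshold that new_threshold would drop.

    Thresholds are treated as inclusive (score >= threshold), an assumption.
    """
    kept_before = [s for s in scores if s >= old_threshold]
    if not kept_before:
        return 0.0
    kept_after = [s for s in kept_before if s >= new_threshold]
    return (len(kept_before) - len(kept_after)) / len(kept_before)


# Hypothetical similarity scores for ten image-text pairs, several of which
# sit just above the 0.26 cutoff.
scores = [0.255, 0.262, 0.263, 0.265, 0.268, 0.271, 0.284, 0.290, 0.301, 0.317]

share = fraction_excluded(scores, 0.26, 0.27)
print(f"{share:.0%} of the retained pairs are lost")  # 44% of the retained pairs are lost
```

Everything in the narrow band between the two cutoffs disappears, so the impact depends on how densely scores cluster there. When many pairs score just above the original threshold, a shift of 0.01 removes a large share of the dataset.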

The lack of clarity about CLIP's training data and criteria, along with the need to make cutoff decisions based on an opaque system, raises important questions about the objectivity and accuracy of the resulting dataset. When an arbitrary threshold is applied to scores from an opaque model, the quality of datasets like LAION-5B depends on judgments that are difficult to audit. This opens a critical debate about how reliable this data is for training AI models at scale.

Ethical Dilemmas in the Collection of Public Data

The creation of datasets like LAION-5B raises ethical dilemmas that cannot be ignored, especially around privacy, consent, and cultural bias. Although Common Crawl collects publicly accessible data, many people who post online do not anticipate that their content may be used to train AI models. This raises the question: is it ethical to use “public” content for purposes like AI training without explicit consent? In a world where digital privacy is increasingly valued, the use of these data can undermine public trust in technology.

Another challenge is cultural and linguistic bias. Common Crawl's content comes predominantly from English-language sites and Western domains, so LAION-5B inherits a limited perspective of the world. Over 60% of the data comes from Western sources, and 46% of the content is in English, even though only about 20% of the world’s population speaks the language. As a result, models trained on LAION-5B may reflect a narrow cultural view that overlooks underrepresented groups and realities. These biases are then amplified as models built on this data are deployed in global applications, creating a feedback loop that perpetuates cultural prejudices and disadvantages.

The Risk of Harmful Content in Massive Datasets

One of the greatest risks in massive data collection is the inclusion of harmful and even illegal content, such as violent, explicit, or sensitive images, which can end up in datasets because no one reviews them thoroughly. This was the case with LAION-5B: in December 2023, an investigation by the Stanford Internet Observatory found that the dataset contained links to suspected child sexual abuse material (CSAM), material that is harmful to those depicted, to those using models trained on it, and to the general public. The finding was serious enough that LAION temporarily withdrew the dataset to implement additional controls and improve its filters.

The fact that this type of content slipped through automated filters raises an urgent question: how reliable are automated processes, such as CLIP-based filtering, at identifying and excluding sensitive material? And, even more importantly, how much should we rely on them? In a dataset the size of LAION-5B, with about 5.85 billion image-text pairs, manual review is simply impossible. If a person spent just one second on each image, eight hours a day, five days a week, it would take approximately 781 years to review the entire dataset. This figure reflects the monumental scale of the dataset and underscores the constant risk of harmful content going unnoticed, even with automated filters.
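The 781-year estimate can be reproduced with a few lines of arithmetic. This sketch assumes about 5.85 billion pairs and 52 working weeks per year with no holidays (our assumption); with a rounder 5.8 billion pairs the result is closer to 775 years.

```python
# Figures from the text: one second per image, eight hours a day,
# five days a week. The pair count and 52 working weeks a year are assumptions.
PAIRS = 5_850_000_000
SECONDS_PER_WORKDAY = 8 * 60 * 60   # 28,800 seconds
WORKDAYS_PER_YEAR = 5 * 52          # 260 days


def review_years(pairs, seconds_per_image=1.0):
    """Working years one person needs to look at every pair once."""
    total_seconds = pairs * seconds_per_image
    return total_seconds / (SECONDS_PER_WORKDAY * WORKDAYS_PER_YEAR)


print(review_years(PAIRS))  # 781.25
```

Even a team of 1,000 reviewers working in parallel would need more than three quarters of a year, which is why large datasets depend on automated filters despite their limitations.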

The inclusion of problematic content is not just a matter of “system errors”; it has a direct impact on society and on public trust in AI. A model trained on problematic data can produce biased, offensive, or insensitive results and can absorb inappropriate content as part of what it has “learned.” In applications such as image search engines or recommendation systems, users could be exposed to explicit material without warning, compromising their safety and damaging the reputation of AI and of the organizations that develop it.

In response, LAION not only temporarily withdrew the dataset but also released a revised version, Re-LAION-5B, in August 2024. Developed in collaboration with child-safety organizations, including the Internet Watch Foundation and the Canadian Centre for Child Protection, along with the Stanford Internet Observatory, this version removes entries linked to suspected illegal content identified by those partners.
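One common way to remove known illegal material without circulating it is to match dataset entries against a list of fingerprints (hashes) supplied by a trusted organization. The sketch below is hypothetical: it assumes SHA-256 hashes of image URLs and a simple record layout, and it does not describe the actual Re-LAION process.

```python
import hashlib


def url_fingerprint(url):
    """Hypothetical fingerprint: SHA-256 of the UTF-8 encoded URL."""
    return hashlib.sha256(url.encode("utf-8")).hexdigest()


def remove_flagged(records, flagged_fingerprints):
    """Keep only records whose URL fingerprint is not on the supplied list."""
    return [r for r in records if url_fingerprint(r["url"]) not in flagged_fingerprints]


records = [
    {"url": "https://example.com/a.jpg", "caption": "a red bicycle"},
    {"url": "https://example.com/b.jpg", "caption": "a mountain lake"},
    {"url": "https://example.com/c.jpg", "caption": "a city street"},
]
# In this sketch the partner shares only fingerprints, not the flagged content.
flagged = {url_fingerprint("https://example.com/b.jpg")}

cleaned = remove_flagged(records, flagged)
print([r["caption"] for r in cleaned])  # ['a red bicycle', 'a city street']
```

The limitation is built in: the filter removes only entries that are already on the list, so new or unreported material passes through unchanged.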

Although Re-LAION-5B has implemented important measures to improve the safety and ethics of its content, no filtering system is completely foolproof. The detection and removal of inappropriate content in massive datasets remains a constant challenge, requiring continuous updates and adjustments. Re-LAION-5B represents progress toward safer datasets, but it does not guarantee the complete elimination of inappropriate content. Solving these problems depends not only on technological improvements but also on establishing regulations, audits, and a culture of responsibility in the use of massive data. This effort is just the beginning of a solution; to ensure ethical and reliable AI, rigorous control is needed at every step of its development.

The Takeaway

The creation of massive datasets like LAION-5B represents a significant step in the development of artificial intelligence. These datasets provide models with the information needed to perform complex tasks, such as recognizing images and understanding context, making AI increasingly useful and relevant across a wide variety of industries. However, the methods and decisions involved in building these datasets lead us to reflect on the ethical dilemmas of AI and how to balance innovation and responsibility.

Resolving these dilemmas is not simple. It requires both technological improvements that increase the transparency and diversity of the data and an ethical commitment within the AI community. The path to responsible AI is not just about perfecting models but about developing systems that respect and reflect the values of a diverse society. As we advance in this area, transparency, inclusion, and a commitment to ethics will be essential to ensure that AI provides value in a fair and reliable manner.

At MangoByte, we believe in the responsible and sustainable use of technology. Contact us today to develop customized solutions that reflect your values and promote a positive impact on your organization.